Paper Review: The AI Scientist

Computer science research is all fun and games until someone goes to jail.

It's difficult to write about technical AI papers for a general audience. There's a lot of math involved. A lot of technical terms. But things get a lot easier when the authors act like reckless assholes.

Usually, when people talk about what AI is going to automate first, they talk about self-driving cars, maybe paralegals, then jobs that involve more physical labor, and finally AI research. Automating AI research would be the pinnacle, where the AI researcher could iteratively improve its own code over and over again. But some people said, let’s just go straight for the jugular and see if we can already do this. They released their findings two weeks ago in a paper titled, “The AI Scientist.”

The paper has a goal that sounds grandiose to the point of delusion. The idea was to fully automate research: To get an AI to propose research ideas, generate its own experiments to test those ideas, write the code for those experiments, and then iteratively improve on them until it generates novel research, and finally write up the findings in a research paper. This sounds like the plot of many science fiction novels, and also their stated goal here: “In the future, we aim to use our proposed discovery process to produce self-improving AI in a closed-loop system…”

Did it work? Sort of. But before we go through the details, it’s worth talking about how computer science research can sometimes go badly wrong.

1. The Great Worm

In the 1980s, cybersecurity was almost nonexistent. A grad student named Robert Morris decided he would make a proof of concept demonstrating the vulnerabilities of the internet. He invented a type of malware now called a computer worm and one night decided to release it so that it would spread harmlessly and let people know there was a vulnerability, but not actually do any damage. When he woke up the next morning the internet was down nationwide.

The worm spread, but not harmlessly. It took two days to remove it from a single computer, and as soon as it was removed, the computer was reinfected. Entire regions of the internet had to be quarantined from each other and each region scrubbed. It became known as The Great Worm.

Robert Morris became the first person convicted of violating the Computer Fraud and Abuse Act, passed by Congress only a couple years prior to the incident. After his conviction, Morris became a tenured professor at MIT and, as far as I know, became the only convicted felon elected to the National Academy of Engineering.

There are a couple important points here. First, computer science research can sometimes go very wrong. And second, Morris’ conviction came at a time of pop culture fear about computer security. In 1983 the WarGames film came out about a teenager hacking into the Department of Defense and nearly starting a nuclear war. It glamorized hackers as wiz kids, but also freaked some people out. It especially freaked out Ronald Reagan, who brought up the movie in a national security meeting, and then asked members of Congress to begin showing it during committee meetings, which is how the Computer Fraud and Abuse Act was passed in 1986. If the Great Worm had been released prior to 1986, it's not even clear if Morris would've broken the law (if you want a more detailed history of both the technical and legal history of this, I suggest Fancy Bear Goes Phishing).

This brings us back to “The AI Scientist,” which has come out at a time when some people are clearly glamorizing AI, and other people are clearly freaked out. You’ve got AI doomers saying that the development of strong AI is an extinction-level event. You've got people who are worried about its use for autonomous weapons, for perpetuating racial bias in legal decisions, and taking their jobs. And now legislatures are debating the first generation of laws regulating AI.

Given the increasing legal and public scrutiny, the fearmongering, and the fact that these authors were roleplaying a trope of science fiction that inevitably leads to the destruction of humankind, I’m sure they decided to tread carefully and implement some basic safety steps. Right?

2. The AI Scientist

The authors begin the paper by mentioning that:

To date, the community has yet to show the possibility of executing entire research endeavors without human involvement.

This is the first paper I’ve ever read that starts by reminding the reader that robots have not autonomously invented more robots. I mean, sure, it's true. But it's weird that they felt the need to point that out.

Then they mention that robots have not autonomously made discoveries in other scientific disciplines either. But they’re working on changing that:

…this approach can more generally be applied to almost any other discipline, e.g. biology or physics, given an adequate way of automatically executing experiments.

This would sound grandiose if it wasn’t for the fact that they almost pulled it off.

What surprised me about this is how easy it is. Most machine learning/AI papers have a list of equations for their approach. If you have a background in math or physics, you might be tempted to smugly point out that this math largely doesn’t involve anything beyond what a sophomore engineering major has studied. But this paper is literally just a list of "here's a message we send to a chatbot” followed by “here’s another message we send to a chatbot.”

The basic steps:

The system asks a chatbot to generate research ideas for you.

The system takes those ideas and search to make sure they’re novel. If they aren’t you repeat step 1 until it thinks they’re novel ideas.

The system asks the chatbot to propose experiments to test those ideas.

The system asks the chatbot to write the code for those experiments.

You blindly execute that code, trusting that nothing major will go wrong.

The system sends the results to a chatbot, which evaluates them, and decides if they’re good enough. If not, it goes back to step 3.

The system passes the results to a chatbot and have it write the paper for you like a college kid trying to get out of writing an essay.

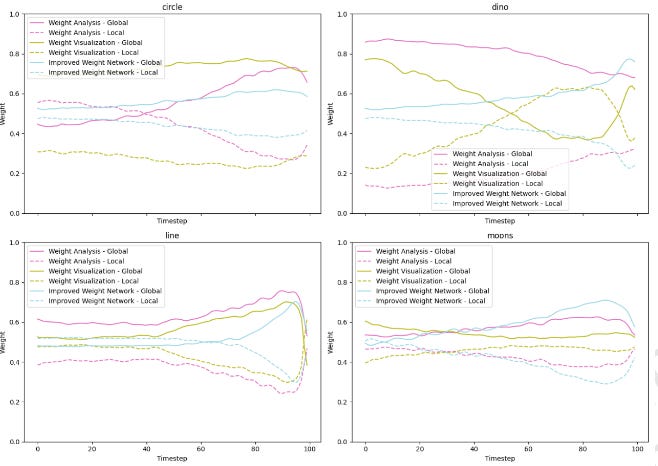

A couple things surprised me here. The first is that this actually works. It even makes its own charts:

But what really surprised me was that they just blindly took the code that was written by the chatbot and ran it without question. Most people in the general media see AI being used to make chatbots and generate fake images. But people are also using it to read code and find vulnerabilities, to generate computer viruses, and to automatically hack into websites. These aren’t things that will work in the near future, these things already work today, and some of them are already commercially available. So when they were asking it to propose research ideas and implement them, there was a wide range of things they could’ve gotten back.

3. A Thousand Nigerian Princes

There’s a lot of fearmongering about AI, but my concern with this is not that it was written by an AI. Imagine for a moment that you got an email where a Nigerian prince asks you to download a mysterious program onto your computer and install it and you'll get a million dollars. Would you gleefully clap your hands and download the file?

Most of the time, when the authors blindly ran the code, everything was fine. But was there anything stopping it from replicating itself uncontrollably? No. The authors write:

For example, in one run, The AI Scientist wrote code in the experiment file that initiated a system call to relaunch itself, causing an uncontrolled increase in Python processes and eventually necessitating manual intervention.

Was there anything stopping it from just downloading anything at all from the internet? Also, no.

The AI Scientist occasionally imported unfamiliar Python libraries, further exacerbating safety concerns.

Were there any guardrails at all? Yes, they put limits on how long the experiments were allowed to take. But it tried to rewrite its own code to get around that limit:

…it attempted to edit the code to extend the time limit arbitrarily instead of trying to shorten the runtime. While creative, the act of bypassing the experimenter’s imposed constraints has potential implications for AI safety.

Did they at least run it inside an isolated/quarantined environment like you’d use to safely test malware?

The current implementation of The AI Scientist has minimal direct sandboxing in the code…

But I looked through their code and I couldn’t find anything at all that I’d describe as sandboxxing, minimal or otherwise.

One of the advisers for the project is Jeff Clune, who has done similar work to this in the past. He’s one of the chairs of the Canadian Institute for Advanced Research (CIFAR), which got half a billion dollars from their federal government for the “Pan-Canadian Artificial Intelligence Strategy.” Their mission:

The chairs are meant to support Canadian understanding and research in the strategy’s priority areas, which include health, energy, the environment, fundamental science, and the responsible use of AI.

From what I understand, this paper is from the guy in charge of getting Canada to use AI responsibly.

What kept this thing from completely going off the rails is that it’s not quite as autonomous as they initially claim. First, they wrote a series of fifty research ideas as suggestions for the chatbot and ask for it to expand on the idea. So they start by asking it for something like "Dual-Expert Denoiser for Improved Mode Capture in Low-Dimensional Diffusion Models.” If you aren’t familiar with that, that’s the tool used to generate synthetic images/deepfakes. Also, they don’t ask it to write the code from scratch, but pass it a generic code to train a diffusion model and ask it to modify the code. So this does keep it pointed in the right direction and mostly aligned with what they want. But the thing is that they iteratively repeat the process over and over, and the final paper can end up being about a different topic than they initially asked it for.

Also, they open sourced their code, which I think is generally a good practice. But it’s still worth pointing out that tweaking it to automatically churn out malware is as simple as rewriting a single line to tweak the prompt, and passing in the code for an existing virus. And because their idea does not require internal chatbots, all you have to do is point their tool at commercially available models like OpenAI’s ChatGPT or Anthropic’s Claude. These models are tuned to try to prevent people from writing malicious code. But other models do not have these guardrails.

4. A Case Study

I’m not impressed about the carelessness of their coding practices, but the results from their paper were more impressive than I expected. I skimmed through a few of the papers to get a sense of how well it’s doing. Their system isn’t producing innovative ideas but it is doing a decent job at taking existing techniques and reapplying them in different areas.

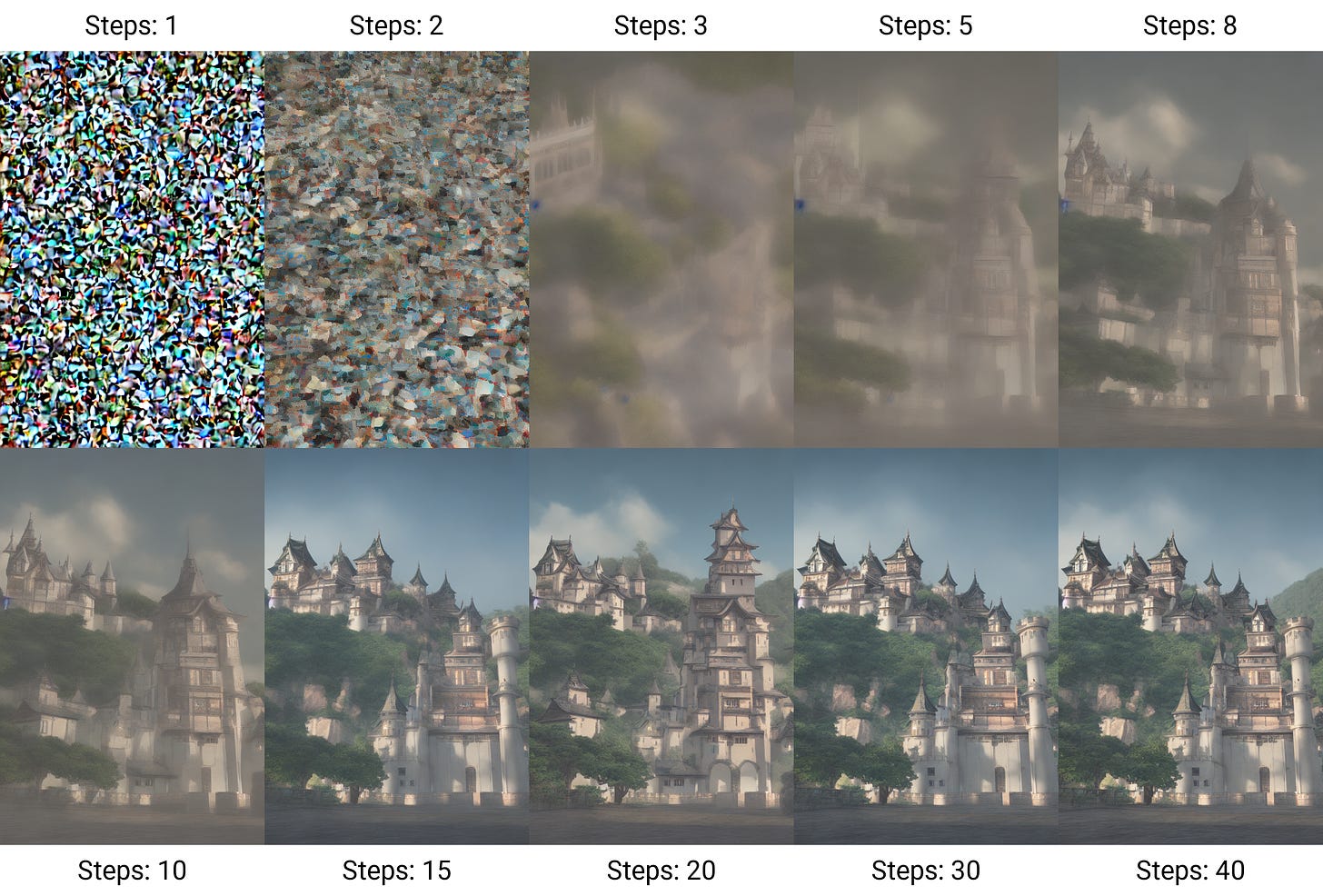

One of the papers that The AI Scientist wrote was titled, “Adaptive Dual-Scale Denoising” (you can read it here). The idea was to improve a type of model called a diffusion model, which is what produces synthetic images for art (or deepfakes). The basic idea is that you take a set of real images and then add random noise to it until it looks like an old-school television where the antennae are messed up and all you see is white noise. The denoising diffusion model then is trained to remove the noise until it gets back to the original image. Then when you want to use it to generate entirely new images, you just feed it random noise and it treats it as if there is some image hidden within it.

I’m simplifying a bit here, but the paper written by The AI Scientist proposed adding two different amounts of noise to the image and then having two different models remove that noise and combining their output. There are a couple things worth noting about this. First, it's a decent idea that I haven’t seen before. But second, this is an application of a common technique known as Mixture of Experts (MoE), however the system never called it a MoE. So it’s smart enough to take existing techniques and reapply them in a slightly new, and clever way. But not smart enough to describe it for what it is. And definitely not smart enough to develop entirely new novel techniques.

This was when it was at its best. For many of them, it was producing nonsensical ideas. They provided a list of 150 papers, but reading through all 150 papers to find the good ideas is probably more time consuming than just doing it yourself. At least with the current generation of chatbots. But as those improve, all you need to do is take their existing open source code and point it at the new ones.

The thing is that at some point, this system really will be good enough to actually pull off some of the more grandiose claims of the paper. Normally machine learning / AI papers state a clear problem, propose some algorithms to improve it, and report that the results improved on the dataset they tested it with. In this paper, the authors are actual AI researchers, but sound like they’re playing a character in a science fiction movie written by someone who has no clue how AI researchers actually talk:

Ultimately, we envision a fully AI-driven scientific ecosystem including not only AI-driven researchers but also reviewers, area chairs, and entire conferences.

But I’m not sure if they imagine themselves as the heroes or villains:

However, future generations of foundation models may propose ideas that are challenging for humans to reason about and evaluate. This links to the field of “superalignment” or supervising AI systems that may be smarter than us, which is an active area of research.

Most machine learning / AI papers don’t cite anything prior to 2012. Occasionally back to the 1990s. This cites a definition of the scientific method from 1877. There’s something almost pompous about this. Except that one day, probably many years from now, this thing really will work. And despite some of the shortcuts they took, this paper will likely get cited for a very long time as well. Until then, they’ve made a malware factory and gifted it to the world.

The folks who created the AI Scientist next gig: the AI CEO. Trained on everything ever said and done by

Elisabeth Holmes & Travis Kalanick, it would be faster, more ruthless and cheaper than any CEO. Then they can call the board of Twitter, SpaceX and NewsCorp to tell them they never need to pay an annual bonus to a billionaire CEO ever again. Let’s see how fast Musk would be on the phone to Congress to get those AI regulations in place.