I’m very curious about what historians a century or two from now will focus on when they talk about the early twenty-first century. Usually, when historians look back at periods from past centuries, they tend to focus on things like major geopolitical events, technological developments with major cultural or economic impacts, or demographic shifts like immigration or peasant rebellions or the growth of towns in the fourteenth century. I have a feeling they won’t care about many of the social media outrages that consume most of our news cycle.

So what will they focus on? If I had to guess one technological, one cultural, and one geopolitical area of focus, it would be:

the industrial revolution with AI and networked communications

the shift in Western culture from Christianity as the dominant religion for the past millennium to a New Puritanism

our geopolitical shift from Pax Americana to a more multipolar world

To start the year off, I’m going to make a few predictions related to the first two things. I’ll let other people talk about the geopolitics.

I. A Brief History of LLMs

In 2017 the Transformer architecture was first developed for translating between languages. Then in 2018, OpenAI took that architecture and trained it on a large text corpus. The original model used BooksCorpus, a collection of thousands of unpublished books; later models in the series drew on Common Crawl, which is basically just a snapshot of the internet. Rather than translating from one language into another, it was just for generating more of whatever language it was fed. They called this the Generative Pre-trained Transformer (GPT). At the time this was a huge model. When I heard that it had 117 million weights I remember laughing with a sort of giddiness at the thought of a model so large.

Then in 2019, they came up with a followup, GPT-2. There was nothing really different about GPT-2 except that the model was 10x larger at 1.5 billion weights. Then in 2020 GPT-3 was 100x larger than that at 175 billion. Now we have GPT-4, which is rumored at 1.8 trillion. The details of the architecture for this are not publicly released, but I don’t think it’s fundamentally different, just bigger. It’s about ten thousand times larger than the original GPT just five years earlier.
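To make the scale concrete, here is a minimal sketch that computes the growth ratios from the weight counts quoted above. The dictionary and function names are my own for illustration, and the GPT-4 number is the rumored figure, not a confirmed one.

```python
# Weight counts as quoted in the text.
# The GPT-4 figure is a rumor, not an officially released number.
PARAMS = {
    "GPT (2018)": 117e6,
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
    "GPT-4 (rumored)": 1.8e12,
}


def growth_ratios(params):
    """For each model after the first, return a tuple of
    (name, ratio vs. the previous model, ratio vs. the first model)."""
    names = list(params)  # dicts preserve insertion order
    first = params[names[0]]
    rows = []
    for prev, cur in zip(names, names[1:]):
        rows.append((cur, params[cur] / params[prev], params[cur] / first))
    return rows


for name, step, total in growth_ratios(PARAMS):
    print(f"{name}: {step:.0f}x the previous model, {total:.0f}x the original GPT")
```

On these numbers the steps work out to roughly 13x, 117x, and 10x, and the rumored GPT-4 is about 15,000 times the size of the original GPT, so the round figures in the text are, if anything, a little conservative.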

Most of the work was engineering, rather than science. The amount of electricity needed to train the largest models has been compared to the electrical consumption of Sweden. It’s a non-trivial engineering challenge to train one of these largest models.

Why does this matter?

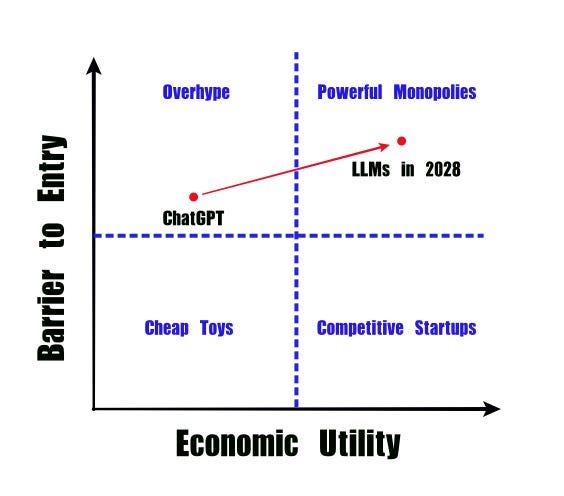

If the models just keep getting larger, then the barrier to entry for world class models will continue to rise, locking out competitors. If this happens, then the models will be consolidated with a few large players. Let’s look at both axes of this:

I think that AI will have a huge long-term economic impact. But at the moment, it’s grossly overhyped. Billions of dollars are being poured into this, but ChatGPT is hemorrhaging cash. The industries that it’s automating, like writing, aren’t terribly profitable.

One) Will there be any GDP growth that economists broadly attribute to AI in the next year? I’m giving this only 10%. I’m going to default to this manifold market to resolve whether I’m correct or not.

Two) Will there be a change in unemployment that economists attribute to AI in the next year? 10%. I’d put unemployment in the US in December 2024 at < 4.1%. And if we’re higher, it won’t be due to AI. I’ll just default to what that manifold market says to keep me honest on whether I’m right or not.

Companies are investing tens of billions into LLMs and so far I’m not aware of a single success story, at least from a business perspective.

Three) Will there be an AI Winter in 2024? 30%. I’d quantify this as a simultaneous decline in the NVIDIA stock and a decrease in machine learning jobs. There’s still a lot of hype. But there are going to be a lot of failed AI startups in the next year. Plus, the interest rates at the moment make VC investments less alluring. Microsoft is hemorrhaging cash by hosting ChatGPT. There’s been a massive investment in Anthropic and other AI companies. They don’t seem to have clear ideas on how they’re going to generate profit. You can have LLMs generate millions of dollars in revenue, but if there are tens of billions in investment, it’ll still be seen as a failure and we can have a bubble burst.

I’m not putting this higher than 30% because I think the markets can stay irrational until 2025.

From a geopolitical perspective, if a Transformer-based model that only works with trillions of parameters achieves AGI, then that will create a barrier to entry so expensive that only large corporations and nation-states will be able to compete. This will lead to a world where power is increasingly consolidated in a small handful of corporations and nations. I’m increasingly, but cautiously, optimistic that this is not the world we’re heading towards. In the most extreme case, if one model was dominant, then API access to that model would become a geopolitical tool as important as, say, cutting off an oil pipeline to a country.

There are some viable alternatives to the Transformer coming out. They’re smaller, but not as well tested. But if they are as competitive as their authors claim, then that means we have a path forward that scales very differently. I might write up a longer piece on this.

Four) Non-Transformers will creep into the HuggingFace leaderboard. I bet that they’ll break into the top 10 on the HuggingFace leaderboard at the 3-billion parameter level. Transformers have been state of the art for seven consecutive years now, but I think the wind is changing. The largest models still take a long time to train and the best models at over 7B will still be Transformers.

I think there’s overhype at the moment. But the long run is still in favor of increased economic utility from these models. And nation-states don’t know how to build them. This is a weird situation. Imagine if there is a massive economic utility to these things in three to five years. And European countries are dependent on a technology they’re unable to produce themselves. I have no idea how they’re going to react to this. Do they try to nationalize some startups? Increase investment? I think they’ll turn to purchasing them from corporations.

Earlier in the year I made some forecasts on sovereign power and LLMs. I think there’s evidence that I got them right the first time:

Five) 70% that by the end of 2024 someone will create a nationalized sovereign LLM. It seems that there’s now a startup providing exactly this service. I don’t believe they’ve done it yet though. I still think that nation-states are going to jump on the hype train on this and try to get their own. But they won’t be able to build their own, they’ll have to buy it.

Six) That one nation will cut off another as part of a boycott. I should specify that this has already happened in one sense. The US cut off Russia and then afterwards ChatGPT was grandfathered into those sanctions. But it was sanctions first, then ChatGPT came along afterwards. This will only really happen if a centralized LLM becomes dominant with smaller countries unable to compete, and LLMs become important to the economy. I put this at 10% before. I’m keeping it there.

And one last AI prediction:

Seven) Google will beat OpenAI/Microsoft by the end of 2024: 60%. Google has been slow, but they’ll dominate over OpenAI. Google is like a large ship trying to compete with a speedboat. They take a long time to turn and navigate, but when they get going they’re going to win.

II. Cultural Shift to a New Faith

I recently started reading The Two-Parent Privilege: How Americans Stopped Getting Married and Started Falling Behind. The book touches on some culture war topics around marriage and gender. It’s written by Melissa Kearney, an economist at the University of Maryland, and she very much goes out of her way to avoid being offensive on these topics. I’m only partway through the book, but as I understand it so far, her main thesis involves three points:

The socioeconomic data clearly shows that babies with two parents do much better than those with only one parent, and

statistically, parents who are married are more likely to stay together than those who aren’t, and

working class men in the US have been hit hard economically and aren’t seen as marriageable, further exacerbating inequality and the class divide.

But I think the response to her argument has been more interesting than the argument itself, and the reaction is emblematic of the intense religious faith that we’re seeing in our society.

The Washington Post review writes, “I have no doubt that Kearney is correct, in her limited way, about the data.”

Being correct about the data is a good thing, right?

Well, not exactly. The reviewer accuses Kearney of “breathing the haunted air” and believes that “dangers lurk” in her ideas. The criticism of Kearney’s ideas is that she’s factually correct, but she is not morally correct. Kearney is a social scientist, but her scientific findings must conform to the reviewer’s moral beliefs.

Throughout our culture I see so many people calling themselves atheist and yet subscribing to strict moral codes where certain factual observations cannot be uttered out loud. Even Kearney’s critics agree that what she’s saying is true. But even though her claims are true, they’re angry that she’s saying them out loud. Her data is accurate, but it’s clearly taboo. The book seems like an appropriate thing to review for a blog named Data Taboo. This moralization of statistics is making it difficult for our society to address basic problems we’re facing.

But speaking of marriage…

Eight) I’ve referenced the decline of men going to college and the economic decline for working class men. I predict that women are going to respond by dating and marrying increasingly older men. Here in the US, the age gap was about five years in the late 1800’s and then slowly declined by a fraction of a year each decade (this is measured on the census) until recently. In 2010 and 2020 it flatlined at 2.3 years. I think we’re going to see this tick up slightly in the 2030 census. If this happens, it’ll be the first reversal of this trend in over a century.

Nine) A surge in AI romance. Unfortunately, I don’t know how I’ll quantify this exactly. I can’t find any statistics on this. But I assume that it’s probably near zero at the moment. Maybe this will be the profit center that stops an AI Winter?

Ten) I think we’ll see the loneliness epidemic remain stagnant. Suicides in the US were at an all time high last year. The CDC data on suicides and deaths of despair lags by about six months. We really only have non-provisional data for 2022. Other people are saying that they think that suicides and deaths of despair went up during COVID and will drop back to 2019 levels. I’m thinking that 2023 numbers will stay within +/- 2% of 2022 levels. We’ll know for sure in about six months.

III. Anti-Romanticism

During the cultural period of Romanticism in the 1700’s and 1800’s, the cultural focus was on individualism, creativity, and glorification of the past. Art critics talk about our current period as Post-Post Modernism. I’d like to rename that.

Social media creates a risk averse culture. And rather than a period of creativity, like in Romanticism, social media encourages mimetic, conformist behavior. Where even the most creative name that art critics can come up with is Post-Post Modernism. Fashion isn’t really changing. Fiction produced by MFA workshops sounds like it came out of a factory. Films are all derivative. The Writers Guild of America went on strike partly because they knew their writing is so monotonous it can be produced by a machine.

Another aspect of Romanticism was a glorification of the past. In contrast, we live in a time when historical statues are torn down. When people introduce themselves with land acknowledgements to signal that they understand the horrors of the past. Just as the Romantic glorification of the past committed lies of omission (and sometimes just lies) this current demonization of the past also contains omissions and focuses only on aspects of the past that conform to the ideological narrative.

But there’s also a growing pool of writers living off crowdsourced Patreon-style funding. I think that the most interesting art is being produced this way. I don’t think it’s an accident that many of my favorite (living) writers are pseudonymous, like Delicious Tacos. And when Scott Alexander released Unsung he still hadn’t been doxxed by the New York Times. The fact that the NYT felt that they needed to hunt down and dox a non-conformist, pseudonymous writer is probably part of why we live in a time when people feel they have to produce art in secret.

A couple concrete predictions:

Eleven) In the top 10 grossing films of 2024, at most one will be original. By original I mean wholly original: not a remake and not set in a pre-existing world, like Star Wars or Barbie. In the twentieth century, some top films were derivative, often based on something like Shakespeare, but most were originals. The top grossing films of 2024 are probably going to be Despicable Me 4, Kung Fu Panda 4, Deadpool 3, and Dune: Part Two, plus a Godzilla movie, a remake of Mean Girls, and maybe a Ghostbusters sequel.

Twelve) A bestselling novel will be credited as written by an LLM by 2027. This will probably be something pieced together by a human and the LLM will be as much of a marketing ploy as anything.

Putting together a couple of your predictions, I wonder if we’ll start to see a shift towards pragmatism in dating and marriage. Anti-romance to go along with anti-Romanticism. Loneliness might drive people to find companions that don’t fit the mold of passionate love that so much of our media tends to idolize. When people can satisfy sexual needs through largely destigmatized services, marriage can look more like the Puritan ideal that’s oriented around comfort and family.

Your emphasis on whatever comes after transformers was also really interesting to me. Rather than a new architecture, do you think there’s any potential for mixture of experts to extend the impact of AI?